Impact Factor : 0.548

- NLM ID: 101723284

- OCoLC: 999826537

- LCCN: 2017202541

Robert Skopec*

Received: June 27, 2018; Published: July 11, 2018

*Corresponding author: Robert Skopec, Analyst-Researcher, Dubnik, Slovakia

DOI: 10.26717/BJSTR.2018.06.001398

There are several spheres of underdevelopment in recent brain research. Brain research (BR) is too oriented toward macro-state findings and ignores the process of reentry between macro-states and micro-states; in particular, it does not reflect the influence of macro-states on internal representations at the micro level (links to quantum physics). There is a tendency toward empirical isolationism, a unilateral concentration on the system alone, without equal study of the mechanisms of interplay between the system and the environment. We are underestimating the informational impact of the probability factor on the brain's behavior. The mathematical approach, as a pure method of artificial intelligence, is not sufficiently implemented; despite this, we believe with A. Einstein that mathematics is the language of God.

Consciousness is a mathematical structure with neurobiological semantics. The higher mental processes all correspond to well-defined but, at present, poorly understood information processing functions that are carried out by physical systems, our brains [1]. Consciousness is the result of a global workspace (GW) in the brain, which distributes information to the huge number of parallel unconscious processors forming the rest of the brain. The GW is composed of many different parallel processors, or modules, each capable of performing some task on the symbolic representations that it receives as input [2]. The modules are flexible in that they can combine to form new processors capable of performing novel tasks, and can decompose into smaller component processors [3].

At the neurobiological level, the global workspace system may consist of two modular subsystems:

a) The first is a processing network of the computational space $S_1$, composed of a net of parallel, distributed and functionally specialised processors (from primary sensory processors, area V1, through unimodal processors, area V4, to heteromodal processors, the resonant mirror neurons in area F5) [4]. They process categorical, semantic information. Reality is a theory arising from the interference of computed inputs compared operationally in the GW with a resonant pre-representation. The interference product is therefore “true” [5].

Let V be a physical system in interaction with another system W. Kochen's standard state vector $\Gamma(t)$ of the two interacting systems has a set of polar decompositions $\Gamma = \sum_k q_k \, \phi_k \otimes \varphi_k$ with the $q_k$ complex. They are parametrized by the right toroid T of amplitudes $q = (q_k)_k$ and comprise a singular bundle over S, the enlarged state space of U = V + W. The evolution of $q$ is determined via the connection on this bundle. Each fiber T (axons, dendrites) has a unique natural convex partition $(p_1, p_2, \ldots)$ yielding the correct probabilities, since the circle of unit vectors which generate the ray corresponding to the state of $\Gamma$ intersects $p_j$ in an arc of length $2q_j$. In this interpretation, rays in Hilbert space correspond to ensembles, and unit vectors in a ray correspond to individual member plane waves of such an ensemble. An element of the fiber $P_\Gamma$ lies above the vector $\Gamma$ in the unit sphere S of $\mathcal{H}$. This fiber can be thought of as an information carrier of the set of all the possible complex polar decompositions of $\Gamma$ at the synapse:

$$\Gamma = \sum_k q_k \Gamma_k$$

where the $q_k \in \mathbb{C}$ and the $\Gamma_k$ are bi-orthonormal. Thus each $\Gamma_k$ is of the form $\phi_k \otimes \varphi_k$, where the $\phi_k$ and the $\varphi_k$ are orthonormal [6].

b) The second is the projective modular system of a global workspace $S_2$, consisting of a set of cortical neurons characterized by the ability to receive from, and send back to, homologous neurons in other cortical areas horizontal projections through long-range excitatory axons [7]. The populations of neurons are distributed among brain areas in variable proportions. It is known that long-range cortico-cortical tangential connections and callosal connections originate from the pyramidal cells of layers 2 and 3, which give or receive the so-called “association” efferents and afferents [8]. These cortical neurons establish strong vertical and reciprocal connections, via layer 5 neurons, with the corresponding thalamic nuclei, contributing to direct access to the processing networks. These projections may selectively amplify or extinguish inputs from processing neurons. The entire workspace is globally interconnected in such a way that only one workspace representation can be active at a given time [2].

The projective system (PS) is involved in the main computational part of the brain: the predictive metalearning system of neuromodulator circuits within the circuits of the basal ganglia, which has been suggested [9] as a major locus of reinforcement learning. The connections of the prefrontal cortex, thalamus, cerebellum, etc. are also important for prediction functions. In a computational theory of the roles of the ascending neuromodulatory systems, these systems mediate the global signals regulating the distributed learning mechanism in the brain [10]. Dopamine represents the global learning signal for the prediction of rewards and the reinforcement of actions. In this computational framework, reinforcement learning means that an agent takes actions in response to the state of the environment so that the acquired reward is maximised in the long run.

A basic algorithm of reinforcement learning can be formulated as a Markov decision problem (MDP) with discrete state, action and time. The agent observes the state $s(t) \in \{s_1, \ldots, s_n\}$ and takes an action $a(t) \in \{a_1, \ldots, a_n\}$ according to its policy, given either deterministically as $a = G(s)$ or stochastically as $P(a \mid s)$. In response to the agent's action $a(t)$, the state of the environment changes either deterministically as $s(t+1) = F(s(t), a(t))$, or stochastically according to a Markov transition matrix $P(s(t+1) \mid s(t), a(t))$ for each action $a(t)$.

The reward $r(t+1) \in \mathbb{R}$ is given deterministically as $r(t+1) = R(s(t), a(t))$, or stochastically according to $P(r(t+1) \mid s(t), a(t))$ [11,12]. In this setup, the goal of reinforcement learning is to find an optimal policy that maximises the expected sum of future rewards. The architecture for reinforcement learning is the actor-critic, consisting of two parts: the critic learns to predict future rewards in the form of a state value function $V(s)$ for the current policy, and the actor improves the policy $P(a \mid s)$ in reference to the future reward predicted by the critic. The actor and the critic learn either alternately or concurrently. The synaptic weights between two excitatory units are assumed to be modifiable according to a reward-modulated Hebbian rule $\Delta w_{post,pre} = \varepsilon R S_{pre} (2 S_{post} - 1)$, where $R$ is the reward signal provided after each network response ($R = +1$ correct, $R = -1$ incorrect), pre is the presynaptic unit and post the postsynaptic unit [2]. The reward signal $R$ influences the stability of workspace activity through a short-term depression or potentiation of synaptic weights: $\Delta w'_{post,pre} = 0.2 (1 - w'_{post,pre})$, where $w'$ is a short-term multiplier on the excitatory synaptic weight from unit pre to unit post [13].
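The actor-critic scheme described above can be illustrated with a minimal sketch. The four-state chain task, the softmax form of the stochastic policy, and the constants `GAMMA`, `ALPHA` and `BETA` are assumptions chosen for illustration; they are not taken from the cited works.

```python
import math
import random

N_STATES = 4          # states 0..3; state 3 is terminal (hypothetical toy task)
GAMMA, ALPHA, BETA = 0.9, 0.1, 0.1   # discount and learning rates (assumed values)

def step(s, a):
    """Deterministic environment: s(t+1) = F(s(t), a(t)), r(t+1) = R(s(t), a(t))."""
    s_next = max(0, s - 1) if a == 0 else s + 1   # action 0 = left, 1 = right
    reward = 1.0 if s_next == N_STATES - 1 else 0.0
    return s_next, reward

def policy(prefs, s):
    """Stochastic policy P(a|s): softmax over the actor's action preferences."""
    exps = [math.exp(p) for p in prefs[s]]
    total = sum(exps)
    return [e / total for e in exps]

def train(episodes=500, seed=0):
    rng = random.Random(seed)
    V = [0.0] * N_STATES                            # critic: state values V(s)
    prefs = [[0.0, 0.0] for _ in range(N_STATES)]   # actor: action preferences
    for _ in range(episodes):
        s = 0
        for _ in range(100):                        # cap on episode length
            probs = policy(prefs, s)
            a = 0 if rng.random() < probs[0] else 1
            s_next, r = step(s, a)
            terminal = s_next == N_STATES - 1
            target = r if terminal else r + GAMMA * V[s_next]
            delta = target - V[s]                   # reward prediction error
            V[s] += ALPHA * delta                   # critic update
            prefs[s][a] += BETA * delta             # actor update
            if terminal:
                break
            s = s_next
    return V, prefs

V, prefs = train()
print([round(policy(prefs, s)[1], 3) for s in range(N_STATES - 1)])
```

The critic and the actor learn concurrently here; the same prediction error drives both updates, which is the role the text assigns to the global dopamine signal.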

The naive Bayes classifier can be compactly represented as a Bayesian network with random variables corresponding to the class label $C$ and the components of the input vector $X_1, \ldots, X_M$. The Bayesian network reveals the primary modelling assumption of the naive Bayes classifier: the input attributes $X_j$ are independent given the value of the class label $C$. A naive Bayes classifier requires learning values for $P(C = c)$, the prior probability that the class label $C$ takes value $c$, and $P(X_j = x \mid C = c)$, the probability that input feature $X_j$ takes value $x$ given that the class label is $C = c$ [14]. As a feature selection filter in perception, the naive Bayesian classifier can be used on the basis of the empirical mutual information between the class variable and each attribute variable [15]. The mutual information is computed during the learning process.
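As a hedged illustration of the two quantities that have to be learned, the sketch below estimates $P(C = c)$ and $P(X_j = x \mid C = c)$ from counts and classifies by the largest product. The toy data set and the function names `fit` and `predict` are hypothetical, and no smoothing of zero counts is applied.

```python
from collections import Counter, defaultdict

def fit(rows, labels):
    """Estimate P(C=c) and the counts behind P(X_j=x | C=c) by maximum likelihood."""
    n = len(labels)
    class_counts = Counter(labels)
    prior = {c: k / n for c, k in class_counts.items()}
    cond = defaultdict(Counter)                 # (j, c) -> Counter of observed values
    for x, c in zip(rows, labels):
        for j, v in enumerate(x):
            cond[(j, c)][v] += 1
    return prior, cond, class_counts

def predict(model, x):
    """Return the class c maximising P(C=c) * prod_j P(X_j=x_j | C=c)."""
    prior, cond, class_counts = model
    scores = {}
    for c, p in prior.items():
        score = p
        for j, v in enumerate(x):
            score *= cond[(j, c)][v] / class_counts[c]
        scores[c] = score
    return max(scores, key=scores.get)

# Hypothetical toy data: the class is determined by the first attribute.
rows = [(1, 0), (1, 1), (1, 1), (0, 0), (0, 1), (0, 0)]
labels = ["a", "a", "a", "b", "b", "b"]
model = fit(rows, labels)
print(predict(model, (1, 0)), predict(model, (0, 1)))
```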

To predict the value of $r^a_y$ given the profile $r^a$ of a particular active user $a$, we apply a slightly modified prediction rule to allow for missing values. The prediction rule is

Kurt Gödel has pointed out, that predictions are like a perception

of the objects of set theory. Prediction is a mode of mathematical

intuition, which in sense of perception induces building up theories

of the future. The given underlying mathematics is closely related

to the abstract elements containd in our empirical ideas. The

brain seems to have internal theories about what the world is

like. Between [6] brains theories is a internal perceptual rivalry in

Darwinian sense. The World as a quantum system can be described

due the polar decomposition, as a whole system consisting from

two subsystems, which are mutually observing one another.

During this observation the global workspace is processing reentry

between internal representations and influence functional of the

environment, between left and right hemisphere, etc [3]. Some

authors are proposing to consider a jth of k elements subset

(Xkj) taken from isolated neural system X, and its complement

X-Xkj (Edelman, 1998)[R]. Interactions between the subset

and the rest of the system introduce statistical dependence

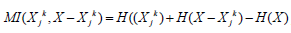

between the two. This is measured by their mutual information  which captures the extent to

which the entropy of Xkj is accounted for by the entropy of X − Xk

and vice versa (H indicates statistical entropy) [16].

which captures the extent to

which the entropy of Xkj is accounted for by the entropy of X − Xk

and vice versa (H indicates statistical entropy) [16].

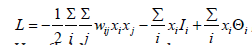
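The mutual information between a subset of units and the rest of the system can be estimated from observed system states. The following sketch uses empirical entropies and the identity $MI(A; B) = H(A) + H(B) - H(A, B)$; the three-unit example system and the function names are hypothetical.

```python
import math
from collections import Counter

def entropy(samples):
    """Empirical Shannon entropy H (in bits) of a list of hashable outcomes."""
    n = len(samples)
    return -sum((k / n) * math.log2(k / n) for k in Counter(samples).values())

def mutual_information(states, subset):
    """MI between the units listed in `subset` and the remaining units of the system."""
    rest = [i for i in range(len(states[0])) if i not in subset]
    part = [tuple(s[i] for i in subset) for s in states]
    comp = [tuple(s[i] for i in rest) for s in states]
    whole = [tuple(s) for s in states]
    return entropy(part) + entropy(comp) - entropy(whole)

# Hypothetical system of three binary units: unit 0 copies unit 1, unit 2 is independent.
states = [(0, 0, 0), (0, 0, 1), (1, 1, 0), (1, 1, 1)]
print(mutual_information(states, [0]))   # unit 0 shares one bit with the rest
print(mutual_information(states, [2]))   # unit 2 is statistically independent
```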

Signalling between neuronal groups occurs via excitatory connections linking cortical areas, usually in a reciprocal fashion. According to the theory of neuronal group selection, selective dynamical links are formed between distant neuronal groups via reciprocal connections in a process called “reentry” [17]. Reentrant signalling establishes correlations between cortical maps, within or between different levels of the nervous system. It is more general than feedback in both connectivity and function, in that it is not simply used for error correction or cybernetic control in the classical sense [18]. The reentrant interactions among multiple neuronal maps can give rise to new properties [19,20]. The origin of slow brain waves is due to a “pulling together of frequencies” [21]. Many neural network models have been developed to simulate some features of the human brain, such as recognition and learning. Each node is connected to all other nodes; $w_{ij}$ denotes the strength of the influence of neuron $j$ on neuron $i$, and $I_i$ is the external input on node $i$ [22]. The total input on node $i$ is the sum of the external input and the inputs from the other nodes.

In the Hopfield model of auto-associative memory, computation means convergence to fixed points. It is assumed that $w_{ii} = 0$ (neurons do not feel themselves), that $w_{ij} = w_{ji}$ (symmetric interactions, unlikely to hold in real brains), and that only one node is updated at a time (asynchronous dynamics). Under these conditions the system has a Lyapunov function, which Hopfield referred to as the “energy function” [23]. This is a useful trick for analysing dynamical systems, as it can be used to prove convergence to a fixed point. If there exists a Lyapunov function, the dynamics always converges to a fixed point. Hopfield thought of this as a physics problem: he called the Lyapunov function the “energy”, and thought of the dynamics of the network as a physical system evolving to the state of lowest energy. So $w_{ij} = w_{ji}$ is like the law “for every action there is an equal and opposite reaction” [16]. The Lyapunov function satisfies the definition above:

where $\Theta_i$ is a threshold for node $i$. Hopfield proposed a neuron dynamics which minimizes the following energy function: $H = -\sum_{i,j} d_{ij} \sigma_i \sigma_j$, where $\sigma_i$ is the state of the $i$th neuron of the network and $d_{ij}$ is the synaptic strength between neurons $i$ and $j$ (Belifante, 1939).
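The convergence argument can be made concrete with a small sketch, assuming states $\sigma_i \in \{-1, +1\}$, zero thresholds, and a Hebbian choice of the symmetric weights. The stored pattern and the function names are hypothetical. With symmetric weights, a zero diagonal and asynchronous updates, the energy never increases from one update to the next.

```python
import random

def energy(d, s):
    """H = -sum_ij d_ij * s_i * s_j for states s_i in {-1, +1} (zero thresholds assumed)."""
    n = len(s)
    return -sum(d[i][j] * s[i] * s[j] for i in range(n) for j in range(n))

def hebbian_weights(patterns):
    """Symmetric weights with zero diagonal: d_ij = d_ji and d_ii = 0."""
    n = len(patterns[0])
    d = [[0.0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:
                    d[i][j] += p[i] * p[j] / n
    return d

def recall(d, s, sweeps=3, seed=0):
    """Asynchronous dynamics: one node is updated at a time, in random order."""
    rng = random.Random(seed)
    s = list(s)
    energies = [energy(d, s)]
    for _ in range(sweeps):
        order = list(range(len(s)))
        rng.shuffle(order)
        for i in order:
            h = sum(d[i][j] * s[j] for j in range(len(s)))   # total input on node i
            s[i] = 1 if h >= 0 else -1
            energies.append(energy(d, s))
    return s, energies

pattern = [1, -1, 1, -1, 1, -1, 1, 1]      # hypothetical stored memory
d = hebbian_weights([pattern])
noisy = list(pattern)
noisy[2] = -noisy[2]                        # corrupt one unit
restored, energies = recall(d, noisy)
print(restored == pattern)
```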

The Hopfield model of associative memory has provided fundamental insights into the origin of neural computations. The physical significance of Hopfield's work lies in his proposal of the energy function and his idea that memories are dynamically stable attractors, bringing concepts and tools from statistical and nonlinear physics into the neurosciences and information sciences. Closed loops existing between the thalamus and cortex are involved in the genesis of the alpha waves. Two sorts of “slow” ion channels could create oscillations in the membrane potential, which are at the beginning of the internal genesis of mental objects and their linking together [2]. The convergence of two oscillations of a given neuron results, if they are in phase, in an amplification [24] of the latent oscillations [25]. Groups of neurons in the brainstem exert a global influence due to the divergent nature of their axons. Nuclei in the reticular formation regulate the transfer of sensory messages and participate in global forms of influence. A particular nucleus containing dopamine, the A10 nucleus, plays an important role in this distribution of the converging influence [3].

This recognising activity represents the intelligence generator regulating the selective contact of the brain with the outside world in a converging manner. The reticular activating system provides a background of excitatory impulses into the cerebral cortex, with an array of probabilistic quantal emissions that are targets for the quantum probabilistic fields of mental influence. The firing of neurones is modified by alterations of the probabilities of quantal emission of the synapses engaged in actively exciting them [26,27]. A change in the activity of a module means a change in the probability of emission in thousands of active synapses. The Lyapunov energy function organizes the convergence of energy to information, and through this process information is probabilistically distributed in the brain as intelligence [28].

In the synchronization theory for oscillatory neural networks, if all the neurons have equal frequencies $\omega_1 = \ldots = \omega_n$ and the connection matrix $C = (c_{ij})$ is self-adjoint, i.e. $c_{ij} = c_{ji}$ for all $i$ and $j$, then the network always converges to an oscillatory phase-locked pattern; that is, the neurons oscillate with equal frequencies and constant, but not necessarily identical, phases [29-31]. There could be many such phase-locked patterns corresponding to many memorized images. The proof follows from the existence of an orbital energy function

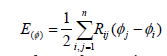
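A minimal numerical sketch of this convergence is given below. It assumes a real symmetric connection matrix and a sine coupling between phases, which is one simple choice and not necessarily the form used in the cited works; the function name `simulate`, the step size and the initial phases are hypothetical.

```python
import math

def simulate(phases, omega, c, dt=0.01, steps=5000):
    """Euler integration of phi_i' = omega + sum_j c[i][j] * sin(phi_j - phi_i)."""
    phi = list(phases)
    n = len(phi)
    for _ in range(steps):
        dphi = [omega + sum(c[i][j] * math.sin(phi[j] - phi[i]) for j in range(n))
                for i in range(n)]
        phi = [p + dt * v for p, v in zip(phi, dphi)]
    return phi

n = 4
c = [[0.0 if i == j else 0.5 for j in range(n)] for i in range(n)]   # c_ij = c_ji
final = simulate([0.0, 1.0, 2.0, -0.5], omega=1.0, c=c)
print(max(final) - min(final))   # spread of phases after the transient
```

With equal frequencies and symmetric positive coupling the phase differences settle to constants (here to zero, the in-phase pattern), while the common phase keeps advancing at the shared frequency.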

The Hoppensteadt and Izhikevich self-adjoint synaptic matrix arises naturally when one considers complex Hebbian learning rules [30]. If all oscillators have equal frequencies, i.e. $\omega_1 = \ldots = \omega_n$, and the connection functions $H_{ij}$ have pairwise odd form, i.e. $H_{ij}(-\psi) = -H_{ji}(\psi)$ for all $i$ and $j$, then the canonical phase model converges to a phase-locked pattern $\varphi_i(t) \to \omega_1 t + \phi_i$ for all $i$, so the neurons oscillate with equal frequencies ($\omega_1$) and constant phase relations $\varphi_i(t) - \varphi_j(t) = \phi_i - \phi_j$. In this sense the network dynamics is synchronized. There could be many stable synchronized patterns corresponding to many memorized images [32-35]. The proof is based on the observation that the canonical phase model has the energy function

where $R_{ij}$ is the antiderivative of $H_{ij}$.

Neural synchrony is a candidate for large-scale integration [36]. Distant functional areas mediated by neuronal groups oscillating in specific frequency bands enter into precise phase-locking during cognitive and emotional activity [21]. Phase-locking synchrony is the relevant biological mechanism of brain integration [37]. The stability of the coupling is estimated by quantifying the phase-locking value (PLV) across the cycles of oscillation at the frequency ($f$) at any given time ($t$):
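As a hedged sketch, the PLV can be computed with the commonly used definition: the modulus of the average unit phasor of the phase difference between two signals. This specific form, and the function name `phase_locking_value`, are assumptions made for illustration.

```python
import cmath
import math

def phase_locking_value(phases_a, phases_b):
    """PLV = | mean_n exp(i * (phase_a[n] - phase_b[n])) |, a value between 0 and 1."""
    n = len(phases_a)
    total = sum(cmath.exp(1j * (a - b)) for a, b in zip(phases_a, phases_b))
    return abs(total) / n

# Constant phase lag across cycles: perfectly locked.
a = [0.1 * k for k in range(100)]
b = [x - 0.7 for x in a]
print(phase_locking_value(a, b))

# Phase differences spread evenly around the circle: no locking.
spread = [2 * math.pi * k / 8 for k in range(8)]
print(phase_locking_value(spread, [0.0] * 8))
```

A value near 1 indicates a stable phase relation across cycles, whatever the size of the lag; a value near 0 indicates that the phase difference varies freely.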

Nonlinear systems are able to benefit from real chaos by using thermal noise in a highly constructive way, which may even increase order. Perception is a new emergent property, inherently connected with self-organization. The same class of dynamical processes which allow the organism to maintain a dynamical equilibrium also allow the system to perceive its environment. Any self-excited oscillator, for example our ear, performs some kind of reification, because it is able to perceive dynamical processes in an object-like representation. To come to life, self-excited systems must be driven to thermal non-equilibrium [38-39]. From a dynamical systems view, the temporal self-structuring ability of self-sustained oscillators is based on an interplay between the generator and the resonator, two components governed by two different types of dynamical processes. The generator is a nonlinear, energetically active element. The resonator can be modelled as a linear, energetically passive system of two mutually connected degrees of freedom.

Through its negative resistance the generator process transfers energy from a steady energy source to the various oscillating modes of the resonator, which limit the energy flow through the system, allowing the oscillations to settle in a steady state when the energy supply equals the losses [40]. The linear passive resonator process is governed by the superposition principle. The new effects occur due to the processes in the generator [41]. There are nonlinear interactions between the external signal (frequency $f_e$) and the internal signal (frequency $f_i$). They depend on the frequency difference $\Delta f = f_e - f_i$, the amplitude of the driving signal, and the degree of coupling [42]. In addition to phase locking and frequency pulling, further nonlinear effects can be noted. On both sides of the locking range new frequency components appear. A damped harmonic oscillator passively follows a driving force, forgetting its own internal rhythm in the steady state [43]. Contrary to that, nonlinear autonomous oscillators in the phase-locked state actively follow the driving signal. The system has perceived the driving signal when it switches from the unlocked to the phase-locked state.

The system changes its mode of behaviour, and the internal dynamical processes perfectly reconstruct the periodicity of the external signal. In the case of weak coupling its perceptions and actions reduce to a modulation of the internal rhythm, bringing about new combination products. Perception in the most basic psychological sense means active construction of internal representations. Perception is more than a passive process; perceptions and actions are interlocked [44]. They are closed into a perception-action loop. The generic nature of self-organization phenomena even bridges the gap between the material and the mental world.

Where “interactions with the environment” under everyday experience masks the quantum, there lies the heart of darkness – and there is a center of the glittering prize. John A. Wheeler

There are another two principles mediating the interaction with the environment. The first, by Smolin, is background independence. This principle says that the geometry of spacetime is not fixed. To find the geometry, we have to solve certain equations that include all effects of matter and energy [45]. The second principle is diffeomorphism invariance. This principle implies that, unlike theories prior to general relativity, we are free to choose any set of coordinates to map spacetime and express the equations. A point in spacetime is defined only by what physically happens at it, not by its location according to some special set of coordinates. Diffeomorphism invariance is very powerful and is of fundamental importance in [46].

Biological effects of torsion fields are realized because torsion fields, as meta-stable states of the spin-polarized physical vacuum, make it possible to formulate a principally new approach to quantum (torsion) generators.

The vacuum domains act through magnetic flux tube structures associated with the primary and secondary sensory organs, which define a reentry in the hierarchy of sensory representations in the brain [47]. The GW is the place of coding by magnetic transition frequencies, which generates sensory subselves and associates them with various sensory qualia and features, for example by quantum entanglement. Heaviside Electrodynamics (HEs) define these sensory projections, and the EEG HEs correspond to our level in the hierarchy of projections [48]. The sensory selves are of the order of HE sizes ($L(\mathrm{EEG}) = c / f(\mathrm{EEG})$). The pyramidal neurons contain magnetic crystals and haemoglobin molecules, which are magnetic. The part $S_1$ can be interpreted as a possibly moving screen of the GW, and the part $S_2$ as the screen of the sensory input $i$, projected and monitored by an external observer [23-24]. The work of the torsion generator is likewise based on spin systems of the physical vacuum and manifests itself through torsion fields. This provides for the possibility of direct access of the operator to the processor without a translating periphery [49].

The operator can, on the basis of an arbitrarily chosen protocol, enter into such a generator without any mediating apparatus, by way of direct interaction with the central processor through the channel of torsion exchange of information. Information at the molecular level is encoded not only in the structure of molecules, but also in the structure of the medium which surrounds them (the environment), and this information is connected with the spin polarization of the physical vacuum, that is, with torsion fields. We can propose that the brain, as a functional structure of internal conscious representations, includes a bio-computer, that is, a Hopfield system, and its outside part, the torsion generator of the cerebellum, interacting through the spin-polarized physical vacuum in the space around the brain [50-51].

The holographic principle says that the laws of physics should be rewritten in terms of information as it evolves on the screen. Here information flows from event to event, and geometry is defined by the measure of the information capacity of a channel through which information is flowing [52]. It would be the area of a surface, so that somehow geometry, that is space, would turn out to be some derived quantity. The area of some surface would turn out to be an approximate measure of the capacity of some channel, and the world would fundamentally be information flow [53]. This is a transition to a computational metaphor in physics. For the screen as a surface it is possible to use Mandelbrot's fractals, which behave as structures whose dimension is greater than the spatial dimension [54]. The Hausdorff-Besicovitch dimension of the surface boundary is given by the formula:

where $A(\delta, E)$. Another mode of computing the screen as a surface is also possible [55].

The learning algorithm in biological systems is partly based on negentropy maximization, which differs from machine learning logic [56]. Shannon also thinks of information as a measure of unexpectedness (innovation) in his “A Mathematical Theory of Communication” (1948). Information $H_n$ was proposed there in terms of the probabilities ($p_i$) of the unexpected, rare and unforeseen.

For a given question (Q constant) it is possible to have different states of knowledge. Shannon defined the information in a message in the following way: a message produces a new X, which leads to a new assignment of probabilities and to a new value of S (entropy). To obtain a measure of the information, he proposed that the information (I) be defined by the difference between the two uncertainties:
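The definition of information as a difference of two uncertainties can be sketched numerically. The example assumes Shannon's entropy in bits as the measure of uncertainty S; the probability assignments and the function names are hypothetical.

```python
import math

def entropy(p):
    """Shannon entropy S = -sum_i p_i * log2(p_i), in bits (terms with p_i = 0 are skipped)."""
    return -sum(x * math.log2(x) for x in p if x > 0)

def information(before, after):
    """Information I as the reduction of uncertainty: I = S(before) - S(after)."""
    return entropy(before) - entropy(after)

prior = [0.25, 0.25, 0.25, 0.25]       # four equally likely answers to the question Q
posterior = [0.5, 0.5, 0.0, 0.0]       # a message rules out two of them
print(information(prior, posterior))   # information carried by the message, in bits
```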

Information always causes a change in probabilities, which changes the energy levels and therefore, by transitivity, the mass as well. Shannon's measure of information is an invention which, by changing the amount of uncertainty, performs external work (complementarity of topology and probabilities, i.e. geometry and information). The probability amplitudes of quantum mechanical processes must be complex. The Hilbert space needs complex probability amplitudes when the distinguishability between two populations is measured by the angle in Hilbert space between the two state vectors [57]. Any quantum evolution of the two-state system can also be thought of as a transformation of the surface of pure states into an ellipsoid contained in the Bloch sphere [58]. The complex number $r(t)$ can be expressed as the sum of complex phase factors rotating with the frequencies given by the differences $\Delta\omega_j$ between the energy eigenvalues of the interaction Hamiltonian, weighted with the probabilities of finding these energy eigenstates in the initial state:

The index $j$ denotes the partial energy eigenstates of the environment of the interaction Hamiltonian (tensor products of ↑ and ↓ states of the environmental spins) [59].
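The verbal description of $r(t)$ can be written as a weighted sum of rotating phase factors. The sketch below assumes the form $r(t) = \sum_j p_j e^{-i \Delta\omega_j t}$; the sign convention, the example probabilities and frequencies, and the function name are assumptions made for illustration.

```python
import cmath
import math

def decoherence_factor(probs, delta_omegas, t):
    """r(t) = sum_j p_j * exp(-i * delta_omega_j * t), with the p_j summing to one."""
    return sum(p * cmath.exp(-1j * dw * t) for p, dw in zip(probs, delta_omegas))

probs = [0.5, 0.5]            # hypothetical weights of two environment eigenstates
delta_omegas = [1.0, -1.0]    # hypothetical frequency differences
for t in (0.0, math.pi / 4, math.pi / 2):
    print(t, abs(decoherence_factor(probs, delta_omegas, t)))
```

At t = 0 all phase factors are aligned and |r| = 1; as the factors rotate at different frequencies they dephase and |r| drops, which is how the loss of coherence shows up in this quantity.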

Contrary to the well-established models, we propose that the Wigner distribution can be regarded as a probability distribution for unexpected but actually occurring events, because $W(x, p)$ is real and can also be negative [60]. A system described by this type of Wigner distribution is localized in both $x$ and $p$, leading to expanded complex probabilities that cause the high impact of pseudo-“unreasonable” negentropy in biological (especially brain-mind) processes:

If the system is more isolated, then it interacts with the apparatus only briefly. As a result of that interaction, the state of the apparatus becomes entangled with the state of the system:

This quantum correlation suffers from the basis ambiguity: the S-A entanglement implies that for any state of either of the two systems there exists a corresponding pure state of the other. The roles of the control system and of the target system (apparatus) can be reversed when the conjugate basis is selected (Gazzaniga, 1995). The ambiguities that exist for the SA pair can be removed by recognizing the role of the environment [28].

For our representations the resonant amplification is expected to be of crucial importance [61,62]. The requirement that the Maxwell Electrodynamics (ME) acts like a resonant wave cavity fixes the representations to a high degree. The fundamental frequency $f = c/L$ of an ME of length $L$ equals the magnetic transition frequency at the sensory organs:

This implies that various parts of the magnetosphere probably correspond to various EEG bands. For the personal magnetic canvas the condition $L = kS$, where $S$ is the transversal thickness of the magnetic flux tube parallel to the ME, guarantees the resonance condition for all points of the ME. The continual transformation of magnetic energy to information (magnetometabolism) might be a key aspect of the correlations between consciousness and the magnetosphere [63]. The cavity resonances associated with the spacetime sheet complex defined by Earth allow transversal communications and amplifications. Besides the 7.8 Hz Schumann resonance associated with Earth, the 40 Hz (14 Hz) resonance associated with Earth's core also deserves to be mentioned, since these are important EEG resonances. The harmonics of these resonances are important too [13].
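The relation $f = c/L$ used above can be turned around to give the length that corresponds to a given frequency. The short sketch below only carries out this arithmetic for the frequencies named in the text; the function name `cavity_length` is hypothetical.

```python
C = 299_792_458.0  # speed of light in vacuum, m/s

def cavity_length(frequency_hz):
    """Length L for which the fundamental frequency f = c / L equals frequency_hz."""
    return C / frequency_hz

for f in (7.8, 14.0, 40.0):
    print(f"{f:5.1f} Hz -> L = {cavity_length(f) / 1000:.0f} km")
```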

The Conscience: a cryptic code of “Back Doors” influence functional in the cortex.

It has also been shown experimentally that microlepton fields can be excited by an electromagnetic field. The presence of a magnetic moment in microleptons causes polarization of the magnetic dipoles of the microleptons, and that polarization causes the creation of standing waves (periodic space structures) in microlepton fields [64]. The microlepton fields can carry information and modulate the magnetic moment. In general, there is evidence that microleptons can carry energy, impulses and information (Ochatrin, 1996). Our earth is part of a “sun-earth-space” system that is out of equilibrium. By providing a departure from equilibrium, the sun becomes a source of information and useful energy. Man's use of energy on the earth's surface constitutes internal transactions with energy fluxes that are thermodynamically usable [65]. This negentropy flux derives from the energy flux from the sun to the earth. By storing the energy and negentropy in various systems, the earth creates subsystems out of equilibrium with the general environment. Both the energy flux and the negentropy flux at the earth's surface are due to solar processes.

The maximum steady-state rate at which information can be used to affect physical processes is of the order of $10^{38}$ bits per second. The possible amplification of information-processing activities is much greater [66]. The Influence Functional is defined as

where $\rho_\varepsilon$ is the initial state of the environment and $S = S_\varepsilon[q, x]$ is the action of the environment (including the interaction term with the system). If there is no interaction (or if the two system trajectories are the same, i.e. $x = x_1$), then the influence functional is equal to one. $F[x, x_1]$ is the so-called influence functional [67]. This functional is responsible for carrying all the effects produced by the environment on the evolution of the system. If there is no coupling between the system and the environment, the Influence Functional is equal to the identity. Calculating the Influence Functional for an environment formed by a set of independent oscillators coupled linearly to the system is a rather straightforward task [48]. The energy eigenstates are also selected by the environment [68].

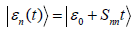

The decoherence in the energy eigenbasis can be established as follows. If $\Pi_\varepsilon$ is a momentum operator (so that the environment operator acts on the environment as a translation generator), and the initial state of the environment is a coherent vacuum state, the evolution turns out to depend on $S_{nn} = \langle \phi_n | S | \phi_n \rangle$. Therefore the overlap between the two states that correlate with different energy eigenstates can be evaluated. Consequently, in this case there is einselection of energy eigenstates (superpositions of energy eigenstates are degraded while pure energy eigenstates are not affected). For this reason, pointer states are energy eigenstates [69].

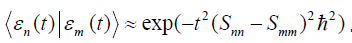

The decoherence functional is defined in terms of which the decoherence process determines the relative “fitness” of all possible superpositions that exist in Hilbert space [47]. The resulting “natural selection” is responsible for the emergence of classical reality [56]. In the GW its consequence is known as environment-induced superselection, or einselection. In a quantum chaotic system weakly coupled to the environment, the process of decoherence will compete with the tendency for coherent delocalization, which occurs on the characteristic timescale given by the Lyapunov exponent $\lambda$. Exponential instability would spread the wavepacket to a paradoxical size, but monitoring by the environment will attempt to limit its coherent extent by smoothing out interference fringes. The two processes reach a status quo when their rates are comparable:

Because the decoherence rate depends on δx, this equation can be solved for the critical, steady-state coherence length, which yields
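A minimal sketch of this balance condition, under stated assumptions. The rate law used here, γ(δx/λdB)² with λdB the thermal de Broglie wavelength, is the standard high-temperature form and is an assumption, since the text does not state it; numerical prefactors differ between conventions. All parameter values and function names are hypothetical.

```python
import math

HBAR = 1.054571817e-34   # reduced Planck constant, J*s
K_B = 1.380649e-23       # Boltzmann constant, J/K


def thermal_de_broglie(mass, temperature):
    """Thermal de Broglie wavelength, hbar / sqrt(2 m k_B T)."""
    return HBAR / math.sqrt(2.0 * mass * K_B * temperature)


def decoherence_rate(delta_x, mass, temperature, gamma):
    """ASSUMED rate law: gamma * (delta_x / lambda_dB)**2."""
    return gamma * (delta_x / thermal_de_broglie(mass, temperature)) ** 2


def critical_coherence_length(mass, temperature, gamma, lyapunov):
    """Solve decoherence_rate(l_c) == lyapunov for l_c under the assumed law."""
    return thermal_de_broglie(mass, temperature) * math.sqrt(lyapunov / gamma)


# Hypothetical parameters, chosen only for illustration.
mass, temperature = 1.0e-3, 300.0   # kg, K
gamma, lyapunov = 1.0e-3, 1.0       # relaxation rate and Lyapunov exponent, 1/s

l_c = critical_coherence_length(mass, temperature, gamma, lyapunov)
rate_at_l_c = decoherence_rate(l_c, mass, temperature, gamma)
print(f"l_c = {l_c:.3e} m, decoherence rate at l_c = {rate_at_l_c:.3e} 1/s")
```

Under this assumed law the coherence length scales as the square root of λ/γ, so a more chaotic system (larger λ) or a weaker coupling (smaller γ) keeps a larger coherent extent.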

All systems that are in principle quantum but sufficiently macroscopic will be forced to behave in accord with classical mechanics as a result of environment-induced superselection. This happens when lc << x, because lc is a measure of the resolution of “measurements” carried out by the environment [70]. The basis-dependent direction of the information flow in a quantum c-not (or in a premeasurement) is a direct consequence of complementarity. It can be summed up by stating that although the information about the observable with the eigenstates {|0⟩, |1⟩} travels from the measured system to the apparatus, in the complementary {|+⟩, |−⟩} basis it seems to be the apparatus that is being measured by the system. This observation also clarifies the sense in which phases are inevitably disturbed in measurements. They are not really destroyed; rather, as the apparatus measures a certain observable of the system, the system “measures” the phases between the possible outcome states of the apparatus.
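The basis dependence of the c-not described above can be checked numerically. The sketch below writes a c-not with the system as control and the apparatus as target in the {|0⟩, |1⟩} basis, re-expresses it in the {|+⟩, |−⟩} basis with Hadamard matrices, and compares the result with a c-not whose roles are reversed. The helper names (`matmul`, `kron`, `close`) and the ordering of the two-qubit basis are choices made for this illustration.

```python
import math


def matmul(a, b):
    """Plain matrix product of two nested-list matrices."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]


def kron(a, b):
    """Kronecker (tensor) product of two nested-list matrices."""
    return [[a[i][j] * b[k][l]
             for j in range(len(a[0])) for l in range(len(b[0]))]
            for i in range(len(a)) for k in range(len(b))]


def close(a, b, tol=1e-12):
    """Entry-wise comparison within a tolerance."""
    return all(abs(x - y) < tol for ra, rb in zip(a, b) for x, y in zip(ra, rb))


# Basis order |system, apparatus>: 00, 01, 10, 11.
# System is the control, apparatus is the target.
CNOT_SYSTEM_CONTROLS = [[1, 0, 0, 0],
                        [0, 1, 0, 0],
                        [0, 0, 0, 1],
                        [0, 0, 1, 0]]
# Apparatus is the control, system is the target.
CNOT_APPARATUS_CONTROLS = [[1, 0, 0, 0],
                           [0, 0, 0, 1],
                           [0, 0, 1, 0],
                           [0, 1, 0, 0]]

s = 1.0 / math.sqrt(2.0)
HADAMARD = [[s, s], [s, -s]]          # maps {|0>, |1>} to {|+>, |->}
HH = kron(HADAMARD, HADAMARD)

# Matrix of the same gate expressed in the {|+>, |->} basis.
cnot_in_pm_basis = matmul(matmul(HH, CNOT_SYSTEM_CONTROLS), HH)
roles_reversed = close(cnot_in_pm_basis, CNOT_APPARATUS_CONTROLS)
print("control and target swap in the +/- basis:", roles_reversed)
```

In the complementary basis the same gate has the matrix of a c-not controlled by the apparatus, which is the sense in which the system “measures” the apparatus.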

These phases in a macroscopic apparatus coupled to the environment fluctuate rapidly and uncontrollably, thus leading to the destruction of phase coherence. However, even if this consequence of decoherence were somehow prevented (by perfectly isolating the apparatus pointer from the environment), preexisting phases between the outcome states of the apparatus would have to be known while, simultaneously, A is in |A0⟩, the “ready-to-measure state” [48].

It may be relevant to propose a possible new information channel in the Universe, in which the greater part of the potential energy of interactions is transformed into spin [55]. The energy of gravispin waves reaching the vacuum domain (VD) from the absolute physical vacuum (APV) is transformed inside the VD into energy of electromagnetic waves. The energy of electromagnetic waves reaching the VD from the APV, on the other hand, is transformed inside the VD into energy of gravispin waves. In a gravitational field, a VD becomes both an electrical and a gravitational dipole; i.e., the VD in this case creates both electrical and gravitational fields inside and outside itself [71]. In a magnetic field, the VD becomes both a magnetic and a spin dipole; i.e., it creates both magnetic and spin fields inside and outside itself. In an electrical field, the VD becomes both an electrical and a gravitational dipole; i.e., it creates both electrical and gravitational fields. In a spin field, the VD becomes both a magnetic and a spin dipole; i.e., it creates both magnetic and spin fields. The VD functions simultaneously as a converter of energy and a transformer of two types of waves and four fields [16].

The VD introduces four polarizations and, consequently, four additional fields into a solid. Four tensors of striction stress are associated with the fields. The tensors of striction stress related to the gravitational and spin fields can be incorporated by analogy with the tensors related to the electrical and magnetic fields [72]. In addition, another tensor is related directly to the spin polarization: a nonsymmetrical tensor of tangential spin mechanical stresses [73]. All these stresses alter the initial stressed state of the solid, which is characterized by the tensor of initial mechanical stress. The striction, spin and original mechanical stresses, normal and tangential, are summed algebraically [74].

As stated above, to explain electromagnetic radiation, especially self-luminescence, it is necessary to assume that space is filled with gravispin waves with a high power flow density in any preselected direction. In this connection, the question arises: what and where are the sources of gravispin waves? In the study this question is considered in part through closed chains of energy transformations, which are included naturally in models of combined electrogravidynamics of the non-homogeneous PV, specifically the mutually reversible transformations: electromagnetic energy to thermal (EM ⇔ T); electromagnetic energy to mechanical (EM ⇔ M); electromagnetic energy to gravispin (EM ⇔ GS); thermal energy to gravispin (T ⇔ GS); gravispin energy to mechanical (GS ⇔ M); mechanical energy to thermal (M ⇔ T). GS energy must be understood as three types of energy: gravitational field, spin field and the energy of gravispin waves. The EM energy must likewise be understood as three types of energy: electrical field, magnetic field and electromagnetic waves [76].

The model of a non-homogeneous PV raises the problem of studying little-known transformations of energy: EM ⇔ GS; GS ⇔ T; and two particular cases of GS ⇔ M, namely spin energy to mechanical and the reverse, and gravispin wave energy to mechanical and the reverse [75]. We examine the following transformations of energy:

a) The transformation of the energy of gravispin waves to mechanical energy;

b) The transformation of heat to the energy of gravispin waves;

c) The reversible transformation of the energy of electromagnetic waves to the energy of gravispin waves.

The three latter transformations of energy are related to the reversed signs in front of the “sources” ρG, JG in the equations of Heaviside. The first of these transformations indicates that a gravity antenna in the form of a point body in accelerated movement is not an emitter (radiator) but an absorber of the energy of gravispin waves [77]. The transformation of the energy of gravispin waves into mechanical energy presents an interesting situation.

Instead of the expected source of gravispin waves, we obtained an absorber of the waves. Outside sources of gravispin waves are again required to describe an absorber of energy, on the condition that the principle of causality not be contradicted [24]. The second transformation of energy, a transformation of heat into the energy of gravispin waves, shows that on the condition σ σG − σ1² > 0 the gravispin waves passing through a substance are strengthened by the heat in the substance [78]. Only in the third transformation, of electromagnetic waves into the energy of gravispin waves and vice versa, do sources of gravispin waves appear [79]. Despite the partial reversibility of the transformations M ⇔ EM, EM ⇔ T and M ⇔ T, the prevalent total energy flows are in the direction of thermal energy, i.e., M→EM→T and M→T. A continuous increase in thermal energy occurs at the expense of mechanical and electromagnetic energy. This system includes the transformations of chemical and nuclear energy. Part of these types of energy is transformed into heat, while the mechanical and electromagnetic energy emerging from the transformation of chemical and nuclear energy is, in the final analysis, also transformed into heat [80-82].

The prevalent energy flows form four energy cycles: T→GS→M→EM→T and T→GS→M→T, in which the VD-NSLF do not participate directly, and T→GS→EM→T and M→EM→GS→M. The energy transformations T→GS and GS→M are weak. This means that at the places in the Universe where intense energy processes take place with a significant growth in entropy, the reverse transformations associated with a decrease in entropy can remain unnoticed. The reverse energy transformations T→GS→M, however, are widespread in the Universe, due, in particular, to the exceptionally high penetrability of GS waves into matter.

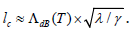

In electrogravimechanics, any system, including the human brain, is a receiving gravispin antenna that absorbs the power of outside gravispin waves. This power increases the kinetic energy of movement in mechanical systems, but only very slightly. In normal mechanical systems, the loss of power related to friction covers the inflow of the power in question practically completely. The transformation of gravispin energy into mechanical energy can play a significant role [83]. Problems of the transformation of gravispin energy into mechanical energy are similar to the problems of Heaviside. The formulae for the absorption of the energy of gravispin waves can be obtained from the formulae for the emission of the energy of electromagnetic waves by substituting masses with a minus sign for electrical charges with a plus sign, the coefficient ε0 for ε0G (μ0 for μ0G), and −c for the speed of light c. As an example, one can obtain the formula for the transformation of gravispin energy into kinetic energy (power). We must use the Umov-Poynting theorem, integrating the Umov-Poynting vector over the surface of a sphere of radius R → 0, treating it as a point with a given mass.

We can use the expressions for electrical and magnetic fields of a point electron in accelerating movement, in which it is necessary to perform the substitutions indicated above. With such an approach, the gravitational field in a nonrelativistic approximation is expressed by the relationship (with r ≤ R → 0):

The spin field is expressed as follows:

(r is the radius vector, whose origin is located at the point; m is the mass; v and v̇ are the velocity and acceleration vectors).

In an approximation of circular orbits, where the acceleration is perpendicular to the velocity, the following expression for the power follows from:

(RC is the average distance).

The removal of heat from a substance by gravispin waves, in the case of the APV, can be considered at ε1 = 0 and μ1 = 0, since  , but with σ σG ≅ σ1². In the case of monochromatic plane linearly polarized waves, electromagnetic and gravispin fields can be represented by the following relationships:

where ω is the angular frequency; s is a parameter with the dimension of inverse velocity; iωs = γ is the wave propagation constant; i is the imaginary unit; Ez, EGz, Hy, HGy are the complex amplitudes of the fields along the z and y coordinate axes; x is the coordinate along which the waves propagate. The reversible transformation of the energy of electromagnetic waves into energy of gravispin waves inside the body of a vacuum domain:

Accordingly, the total power flow of electromagnetic and gravispin waves, averaged over time, has the same value in any section perpendicular to the x axis (in the yz plane), i.e.,

The expressions for the fields represent a single solution for both electromagnetic and gravispin waves. This solution demonstrates that the energy of an electromagnetic wave passes into the energy of a gravispin wave, and vice versa (Benaceraff, 1964). The period for full transformation of the energy of an electromagnetic wave into the energy of a gravispin wave and back is expressed by the relationship

where λ is the electromagnetic wavelength. The PV is characterized by four polarizations: PE is the electrical polarization of PV; PM is the density of the magnetic moments of PV; PG is the density of the gravitational dipoles of PV; PS is the density of the spin moments (spins) of PV, or the density of the angular momentum of PV. The polarizations PE and PM are related, as are the polarizations PG and PS, but the two pairs PE, PM and PG, PS are completely independent of each other [46,84].

Since PV quarks have electrical charges and mass, as well as magnetic and spin moments, electrical and gravitational polarizations can be related in PV, as can magnetic and spin polarizations. This connection between PV polarizations can be introduced into Heaviside’s vacuum equations.

A solution to this problem: the connection between electric and gravitational polarization, and between magnetic and spin polarization, was assumed to exist only in local areas. Thus an object called the VD appeared in PV theory [85]. The mathematical model of the vacuum domains based on these notions can be presented as follows:

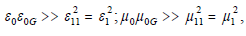

where E, EG are electric and gravitational fields in the moving bases. Inside the domains the energy conservation law, the law of the conservation of momentum and the law of the conservation of the impulse are observed. Self-luminescence of vacuum domains takes place because gravispin waves energy is transformed into electromagnetic waves energy. The complete conversion of the energy of one type of waves into the other takes place inside VD at the distance

(λ is the wavelength; αε = ε1/(ε0ε0G)^(−1/2); αμ = μ1/(μ0μ0G)^(−1/2); αε, αμ ≤ 1).

The domain’s magnetic field is connected with the polarizations of the VD that arise as a result of the effect of the Earth’s fields. For a spherical domain these polarizations are expressed as follows:

a) electrical polarization of the domain

b) gravitational polarization of the domain

d) magnetic polarization of the domain

f) spin polarization of the domains

(E0 is the electric field (130 V/m); E0G is the gravitational field (9.83 m/s²); H0 is the magnetic field (19.5 A/m); H0S is the spin field (10¹³ kg/(m·s)) of the Earth). When a VD penetrates into matter, it acquires the electric monocharge

When VD penetrates into the matter with this monocharge it causes the electric current of the free electric charges that aim at the neutralization of the monocharge. This current is responsible for the liberation of the heat energy.

(ρ′ = −αε·η0⁻¹ρG; R is the domain radius).

On the possible Conversion of Mass, Energy and Information: Skopec’s Replacement function:

At a speed different from c, energy quanta are transformed into information quanta (where I = information, W = work, Q = energy, S = entropy, p = probability, ln = the natural logarithm with base e, etc.).

There is a transformation between thermal and mechanical energy:

Through our replacement function we have proved that the atoms of space and time are also the basic quanta of information.

E = hν,

This means that the conversion of energy quanta into information quanta is physically possible and will become technically possible [86].

The torsion effects of the VD could act through the thermal excitations of the field ϕ. For the density matrix ρ(x, x1) of the particle in the position representation, we can write the master equation:
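For orientation, the symbols defined here (a Hamiltonian H, a relaxation rate γ, the Boltzmann constant kB and a field temperature T) are those of the standard high-temperature master equation for a particle coupled to a thermal scalar field. Identifying that form with the equation intended at this point is an assumption, as is the symbol m for the particle mass; x′ stands for the second coordinate written x1 in the text.

```latex
\frac{d\rho}{dt}
  = -\frac{i}{\hbar}\,[H,\rho]
    \;-\; \gamma\,(x - x')\left(\frac{\partial}{\partial x} - \frac{\partial}{\partial x'}\right)\rho
    \;-\; \frac{2 m \gamma k_B T}{\hbar^{2}}\,(x - x')^{2}\,\rho
```

The first term is the unitary evolution, the second is relaxation at rate γ, and the third suppresses the off-diagonal elements of ρ at a rate that grows with the square of the separation x − x′.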

(H is the particle’s Hamiltonian, γ is the relaxation rate, kB is the Boltzmann constant, and T is the temperature of the field) [87-88]. We have an n-parametric simple transitive group (group Tn) operating in the manifold

$C^{f}_{ab}$ are the structural constants of the group, which obey the Jacobi identity. The vector fields ξbj are said to be infinitesimal generators of the group. We see that  , the components of the anholonomity object (Ricci’s torsion) of a homogeneous space of absolute parallelism, are constant. It is easily seen that the connection possesses torsion. In this case

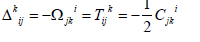

where -1/2Cjki is the torsion of absolute parallelism space. It was exactly in this manner that Cartan and Schouten introduced connection with torsion

where Sijk is Cartan’s torsion of the Riemann-Cartan space. Ricci’s and Cartan’s torsions have the same symmetry properties, but Ricci’s torsion depends on angular coordinates, unlike Cartan’s. Moreover, Ricci’s torsion defines the rotational Killing-Cartan metric, whereas Cartan’s does not [88,89].

Following Marlin, the naive Bayes classifier can be compactly represented as a Bayesian network with random variables corresponding to the class label C and the components of the input vector X1...XM. The Bayesian network reveals the primary modelling assumption present in the naive Bayes classifier: the input attributes Xj are independent given the value of the class label C. A naive Bayes classifier requires learning values for P(C = c), the prior probability that the class label C takes value c, and P(Xj = x | C = c), the probability that input feature Xj takes value x given that the value of the class label is C = c [55]. The perception feature selection filter is the naive Bayesian classifier based on the empirical mutual information between the class variable and each attribute variable. The mutual information is computed during the learning process [69].
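The two quantities named above, P(C = c) and P(Xj = x | C = c), can be estimated by counting. The following is a minimal sketch of a categorical naive Bayes classifier, not the cited implementation: the Laplace smoothing parameter `alpha`, the function names and the toy data are all assumptions introduced for illustration.

```python
from collections import Counter, defaultdict


def fit_naive_bayes(rows, labels, alpha=1.0):
    """Estimate P(C=c) and P(X_j=x | C=c) by counting.

    alpha is a Laplace smoothing constant (an assumption of this sketch).
    """
    class_counts = Counter(labels)
    priors = {c: n / len(labels) for c, n in class_counts.items()}
    n_features = len(rows[0])
    # Distinct values seen for each feature, needed for smoothing.
    values = [{row[j] for row in rows} for j in range(n_features)]
    counts = defaultdict(int)
    for row, c in zip(rows, labels):
        for j, x in enumerate(row):
            counts[(j, x, c)] += 1

    def likelihood(j, x, c):
        return (counts[(j, x, c)] + alpha) / (class_counts[c] + alpha * len(values[j]))

    return priors, likelihood


def posterior(priors, likelihood, row):
    """P(C=c | row): prior times the product of per-feature likelihoods, normalized."""
    scores = {}
    for c, prior in priors.items():
        score = prior
        for j, x in enumerate(row):
            score *= likelihood(j, x, c)   # conditional independence given C
        scores[c] = score
    total = sum(scores.values())
    return {c: s / total for c, s in scores.items()}


# Hypothetical toy data: two categorical features, two classes.
rows = [("high", "yes"), ("high", "no"), ("low", "yes"), ("low", "no")]
labels = ["a", "a", "b", "b"]
priors, likelihood = fit_naive_bayes(rows, labels)
post = posterior(priors, likelihood, ("high", "yes"))
print(post)
```

The product inside `posterior` is exactly the independence assumption: each feature contributes its own factor once the class is fixed.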

To predict the value of $r^a_y$ given the profile $r^a$ of a particular active user a, we apply a slightly modified prediction rule to allow for missing values. The prediction rule is

It is well established that population dynamics uses an ensemble approach. Population thinking is the essence of Darwinism [90]. An ensemble is represented by a cloud of points in phase space. The cloud is described by a function ρ(q, p, t), which is the probability of finding, at a time t, a point in the region of phase space around the point q, p. A trajectory corresponds to ρ vanishing everywhere except at the point q0, p0. Functions that have the property of vanishing everywhere except at a single point are called Dirac delta functions. The function δ(x − x0) vanishes for all points x ≠ x0. The distribution function ρ takes the form:

$$\rho = \delta(q - q_0)\,\delta(p - p_0)$$

The Ricci flow equation used by Richard Hamilton is the evolution equation

$$\frac{d}{dt}\, g_{ij}(t) = -2R_{ij}$$

for a Riemannian metric gij(t). It has been proved that the Ricci flow preserves the positivity of the Ricci tensor in dimension three and of the curvature operator in all dimensions, and that the eigenvalues of the Ricci tensor in dimension three and of the curvature operator in dimension four become pinched pointwise as the curvature grows large. This yields the convergence results: the evolving metrics converge, modulo scaling, to metrics of constant positive curvature. Hamilton proved a convergence theorem in which such scalings converge smoothly, modulo diffeomorphisms, to a complete solution of the Ricci flow. In quantum field theory the Ricci flow is an approximation to the renormalization group (RG) flow for the two-dimensional nonlinear σ-model. The connection between the Ricci flow and the RG flow suggests that the flow must be gradient-like [87]. The lens spaces with finite cyclic fundamental group constitute a subfamily classified up to piecewise-linear homeomorphism. Under the Ricci flow equation a round sphere collapses to a point in finite time. Hamilton’s theorem says that, if we rescale the metric so that the volume remains constant, then it converges towards a manifold of constant positive curvature. Grigory Perelman pointed out that one way in which singularities may arise during the Ricci flow is that a 2-sphere in M3 may collapse to a point in finite time. In his approach, “surgery” on the manifold is used to stabilise the convergence process [86].
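The finite-time collapse of the sphere can be illustrated in the simplest case. For a round n-sphere of radius r the Ricci tensor is (n − 1)/r² times the metric, so the Ricci flow reduces to the ordinary differential equation dr/dt = −(n − 1)/r, with solution r(t)² = r0² − 2(n − 1)t. The sketch below, whose function names and step count are choices made for this illustration, compares that closed form with a forward-Euler integration.

```python
import math


def round_sphere_radius(r0, t, n=3):
    """Closed-form radius of a round n-sphere under Ricci flow."""
    r_squared = r0 ** 2 - 2 * (n - 1) * t
    if r_squared < 0:
        raise ValueError("t is past the collapse time")
    return math.sqrt(r_squared)


def collapse_time(r0, n=3):
    """Time at which the round n-sphere shrinks to a point."""
    return r0 ** 2 / (2 * (n - 1))


def euler_radius(r0, t_end, n=3, steps=20_000):
    """Forward-Euler integration of dr/dt = -(n - 1) / r."""
    dt = t_end / steps
    r = r0
    for _ in range(steps):
        r += dt * (-(n - 1) / r)
    return r


r0 = 1.0
t_half = collapse_time(r0) / 2          # halfway to the singularity
exact = round_sphere_radius(r0, t_half)
numeric = euler_radius(r0, t_half)
print(f"collapse time = {collapse_time(r0)}, r(T/2): exact {exact:.6f}, Euler {numeric:.6f}")
```

Rescaling the metric to keep the volume fixed removes this shrinking, which is why the normalized flow on a round sphere stays at constant positive curvature.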

A complete description of a microstate is given by the state function (ψ-function), whose squared modulus is a probability density. It is itself the result of the interaction of the ψ-functions of single particles. It is possible to increase the probability of a macrostate only by re-normalization of the probabilities of the microstates. When the living macrostate of a biological system realizes the corresponding microstates by means of re-normalization of probabilities, the mode of the distribution can shift to its tail. This is a result of multiple interactions with the initial, a priori distribution function of probabilities over all possible microstates, which in complicated biosystems are not equally probable. The macrostate exercises this determining influence by means of a probability filter [37]. The Bayes formula can be used as a mathematical model:

$$p(\mu / y) = k\, p(\mu)\, p(y / \mu)$$

where p(μ/y) is the distribution function of the living states in the ensemble μ resulting from the effect of the probability filter, k is the normalization constant, p(μ) is the a priori distribution function of the ensemble μ, and p(y/μ) is the distribution function describing those states of the ensemble μ that the probability filter y selects as living ones.
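The re-normalization described by the Bayes formula can be sketched on a discrete ensemble. In the example below the prior p(μ), the filter p(y/μ) and the state names are hypothetical numbers chosen only to show the mode of the distribution moving away from the most probable a priori state; `renormalize` is a name introduced here.

```python
def renormalize(prior, filter_likelihood):
    """Return the posterior p(mu/y) = k * p(mu) * p(y/mu) and the constant k."""
    unnormalized = {state: prior[state] * filter_likelihood[state] for state in prior}
    k = 1.0 / sum(unnormalized.values())
    return {state: k * value for state, value in unnormalized.items()}, k


# Hypothetical a priori distribution over three microstates.
prior = {"m1": 0.7, "m2": 0.2, "m3": 0.1}
# Hypothetical probability filter: how strongly each state is selected as "living".
filter_likelihood = {"m1": 0.1, "m2": 0.5, "m3": 0.9}

posterior, k = renormalize(prior, filter_likelihood)
prior_mode = max(prior, key=prior.get)
posterior_mode = max(posterior, key=posterior.get)
print(posterior, "mode:", prior_mode, "->", posterior_mode)
```

With these numbers the a priori mode m1 loses its lead and m2 becomes the most probable state, which is the shift toward the tail of the original distribution.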

In calculating the statistical weights of the states, we must not sum the probabilities of alternative microstates, but multiply the conditional probabilities of all successive microstates composing the thickness of the given macrostate. The multiplication leads to statistical values less than one, and to a negative value of entropy. N. Kobozev proposed to name this process “anti-entropy” [91]. The re-normalization is a constant outer interference, from the macrostate having its image, into the natural dynamics of the microstates. The element of “miracle” is the discrepancy between the process observed at the microlevel and the second law of thermodynamics: an uncertainty about the future is introduced into the natural dynamics. The measure of this “miracle” element is anti-entropy, and the re-normalization of the probabilities is the mechanism by which anti-entropy originates. The appearance of living states is probable only in biological systems. Biosystems arrange a re-normalization of the probabilities of the states, determined by the higher structural level. The re-normalization of probabilities is characteristic of all levels of the biological hierarchy. The Bayes equation is the mathematical description of the re-normalization of probabilities.

The Bayes approach is a theory reflecting that, from a certain level of life, the re-normalization of probabilities realizes the function of the probability filter. This re-normalization of probabilities at all levels, from a cell up to the biosphere, reduces to a multiplicative interaction of the state functions for the corresponding macro- and microlevels. The probability amplitudes:

where u1 and u2 are the wave functions, and ψ = c1u1 + c2u2.
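For reference, the probability density of the superposition ψ = c1u1 + c2u2 can be expanded as follows. This is the standard expansion for complex coefficients and wave functions; presenting it in this particular notation is a choice made here.

```latex
|\psi|^{2} = |c_1 u_1 + c_2 u_2|^{2}
           = |c_1|^{2}\,|u_1|^{2} + |c_2|^{2}\,|u_2|^{2}
             + 2\,\mathrm{Re}\!\left(c_1^{*} c_2\, u_1^{*} u_2\right)
```

The first two terms are the probabilities of the separate alternatives; the last, cross term depends on the relative phase of the two components and vanishes when either coefficient is zero.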

The double product is the interference term, and human intelligence is generated by the convergence of the energy function (the Lyapunov function) and the interference term. The brain functions like a mathematical generator of intelligence. We can see the brain as a biocomputer generating consciousness and anti-entropy, or as a biogenerator of consciousness and anti-entropy.

“You can’t take the problem seriously, unless you have a solution to propose.” Secretary of State George Marshall. Neurobiology has achieved a great deal of success in describing the path of the stimulus in the organism. But in our view this research is somewhat one-sided, concentrating on only one way of obtaining information about the workings of the brain. We believe, as the Nobel laureate Sir John Eccles pointed out, that the brain has a structure of maximal complexity, and that while complexity can be studied part by part, it can be understood only through multiple complex approaches. Today we prefer these one-sided empirical methods, but they do not sufficiently reflect the functions of interactive systems influencing one another at the complex level between organism, brain, and their environment. Knowing the parts of the system perfectly is not enough, because the process of interaction transforms the microstates of the system. The reductionist approach has had a very successful history, with the discovery of many fundamental principles concerning the parts that go to make up a whole living organism. Most recently, one need only look at the achievements of molecular biology.

It is becoming clear, however, that there are some fundamental aspects of life and the brain that cannot be understood from a reductionist point of view. In the living state the parts (the molecules, cells, and tissues) are never isolated and are not free to function separately; rather, they co-ordinate their activities to generate a unique state of dynamic order that is not found in any non-living system. Thousands of reactions go on simultaneously in living systems, constituting the perfectly organized fluctuations of life. Precise co-ordination is needed at each of a number of hierarchical levels: electronic, cellular, molecular, all the way to the whole organism. However, most of the experimental methods used in contemporary neurosciences are not suited to the study of these co-operative mechanisms, and so until recently they have been mostly ignored. The alternative requires understanding the brain as an interactive system that responds as an integrated system to any stimulus. The brain is an open system, which continually exchanges energy and information with the environment. Because of its extreme sensitivity, which can be understood in terms of non-linear dynamics, it can respond to a small input of energy that carries an enormous amount of information.

Whatever power we have, the outcome can be influenced only partially. As J. A. Wheeler pointed out, the experimenter decides what feature of the electron he will measure, but “nature” decides what the magnitude of the measured quantity will be. Complementarity is the deepest-reaching insight that we have ever won into the workings of existence. What matters is not the size or nature of the object studied, but its interaction with the environment, and how “interaction with the environment” in everyday situations masks the quantum through the influence of decoherence. There lies the heart of darkness, and there is the center of the glittering prize (in Cabala, reality is a mirror of the interactions with the environment). Neuronal growth, projection, and synaptic transmission are shaped by a process of Darwinian selection. If we combine these facts with the observation that functional maps of the brain display differences in the projection areas of specific afferent stimuli at different times, we begin to see brain circuitry in a new way. An afferent stimulus may select the receptors that are most responsive at the moment of its arrival. A neuron’s responsiveness depends on its past functional history: how intensely it has been stimulated, by what kinds of afferent axons, whether it facilitates reception of broadcasts from its afferent synaptic terminals, etc.

The phenomenon of memory is not only the reproduction of facts; as Euler pointed out, a decisive role is played by the active re-construction of the incoming stimulus through the highly individualized, subjective categorization of sensory details. As Edelman’s theories convey, each brain is unique, its perceptions are its own creations, and its memories are a personalized synthesis of these perceptions. Individuality is created in ontogenesis by a process of Darwinian selection. Intelligence is an ability acquired by each brain to categorize in a manner that facilitates creative associations when information is retrieved. The information coded in DNA does not by itself fulfil all the conditions needed for individual development; there are also other factors, other conditions realized by re-entry of interactions with the environment. Much of this influence is regulated not by DNA, and not only by biological mechanisms, but also by physical, mathematical, probabilistic, chemical, gravispin, or psychological mechanisms of re-entry. Re-entry between the cortex and the limbic system also does not always operate in the same way. Resonance between rational concepts is pleasurable. As Blaise Pascal said, “the heart has its reasons that reason does not know”.

The limbic system and the hypothalamus (together the “heart”) have enough autonomy vis-à-vis the cortex that, under the pressure of particularly strong sensory stimulation, motivation may increase to such an extent that the subject “goes into action” even if the cortical resonance (“reason”) says “no” to the act in question [2]. The recognizing activity represents a “generator of intelligence”, owing to anti-entropic selection by the comparison of mental objects in terms of their resonance or dissonance. The influence functional may also include some form of “Higher Intelligence” as a strange attractor behind the scenes, like the Programmer of the Universe Software (“God”). But we are not able to “read His Mind” because of Gödel’s incompleteness theorem. The Lyapunov energy function organizes the conversion of energy into information. The probability re-normalization increases the external work of the new information, also causing topological changes (torsions) in the system. Changes in the topology make possible the effect of the gravispin powers penetrating into matter.