Abstract

This mini review considers a new method in the field of neurodynamic modeling. The method provides researchers with a set of neural network simulators that can greatly simplify research in neuroscience. In addition, pulsed neural networks process information in a way similar to biological nervous systems (neurons transmit information using short pulses - spikes) and are therefore also interesting from a fundamental point of view. Hardware implementations of pulsed neuromorphic networks based on a non-von-Neumann architecture are of interest because of their potentially higher data processing speed compared with traditional implementations. In one such implementation, each neuron integrates its input pulses, weighted by the synapses over which they arrived, and generates an output pulse if the resulting value exceeds a certain threshold. This pulse is applied both to the inputs of other neurons and back to the input synapses, which makes it possible to adjust their weights, for example, according to Hebb’s rules or their variants - STDP rules (spike-timing-dependent plasticity, that is, plasticity that depends on spike timing). Such plasticity needs to be realized in organic memristors, for example those based on polyaniline, which are promising as synaptic connections in hardware implementations of neuromorphic networks.

Keywords: STDP rules; Non-Von-Neumann Architecture; Organic Memristors; Massive Multi-Thread Processor

Introduction

The current stage of development of science and technology is accompanied by the intensive introduction of new information technologies into all spheres of human activity, and today it is impossible to imagine research in various fields of science without advanced computer systems [1,2]. Owing to continuous improvement, the resources of modern information and computer technology have increased many times over and make it possible to solve problems of a complexity that seemed unattainable in the recent past, for example, computer modeling of multidimensional systems. Modeling is one of the most effective research methods. Its task is to build and analyze models of real objects and to study the processes or phenomena that occur in the systems in question, in order to identify the mechanisms of their functioning and to predict the phenomena of interest to the researcher. A model makes it possible to overcome the limitations and difficulties of setting up a laboratory experiment by conducting so-called numerical experiments, and to study the response of the system to changes in its parameters and initial conditions. For this reason, computer simulation is widely used in all the natural sciences. Neuroscience (the science of the brain), whose task is to study the functioning of the brain and nervous system, is no exception.

The Main Modern Works in the Field of Modeling Brain Functions

The study of the mammalian brain by means of computer simulation is one of the most promising approaches today. The following are the most significant results:

a) Model of visual attention (Silvia Corchs, Gustavo Deco). The model consists of interconnected modules that can be associated with different areas of the dorsal and ventral pathways of the visual cortex.

b) Model of layers II/III of the neocortex (Mikael Djurfeldt, Mikael Lundqvist). This model was implemented on a Blue Gene/L supercomputer. It includes 22 million neurons and 11 billion synapses and corresponds to the cerebral cortex of a small mammal.

c) Self-sustaining irregular activity in a large-scale model of the hippocampal region (Ruggero Scorcioni, David J. Hamilton and Giorgio A. Ascoli). The model consists of 200,000 neurons of 16 types. The number of neurons and their connections correspond to the anatomy of the rat brain. In the project, the authors analyze the emerging activity of the network and how it is affected by a decrease in the size or connectivity of the network model.

d) Blue Brain Project (Markram H. et al.)

e) Model of the mammalian thalamocortical system (E.M. Izhikevich and G.M. Edelman).

Let us dwell on some of them in more detail.

Blue Brain Simulation Project

The Blue Brain project started on July 1, 2005 as a collaboration between the Swiss Federal Institute of Technology in Lausanne (École Polytechnique Fédérale de Lausanne - EPFL) and IBM Corporation. The aim of the project is detailed modeling of individual neurons and of the typical columns of the neocortex that they form - neocortical columns [3]. The neocortex is located in the upper layer of the cerebral hemispheres, has a thickness of 2-4 mm and is responsible for higher nervous functions - sensory perception, execution of motor commands, spatial orientation, conscious thinking and, in humans, speech. The human neocortex has six horizontal layers of neurons that differ in type and in the nature of their connections. Vertically, neurons are combined into so-called cortical columns, whose functioning forms the basis for cognitive and sensory processing of information in the brain. Each such column contains about 10 thousand neurons with a complex but ordered structure of connections among themselves and with neuron groups external to the column. The biologically relevant model was built on data on the morphology and activity dynamics of rat neurons and on data on neuron physiology obtained over the past decades of nerve cell research. From these data, the positions of the various cell types in space, their detailed morphology and the architecture of intercellular interactions were recreated on a supercomputer. The evolution of the model was computed with the NEURON simulator, which contains implementations of models whose parameters were adjusted to fit experimental data [4].

That work proposed a method for automatically adjusting the parameters of compartmental neuron models using large sets of experimental responses. Within this project, the neuron model accounts for the differences between neuron types, the spatial geometry of the neurons, and the distribution of ion channels over the surface of the cell membrane. The developers of the model note that the variety of neuron types combined into a neuron group is very important for the cognitive functions of that group: each type of neuron is present in certain layers of the column, and the spatial arrangement, density and volume distribution of neurons of the various types serve as the basis for the orderly spread of activity over the network as a whole. The model also takes into account that the exact shape and structure of a neuron affect its electrical properties and its ability to connect with other neurons, and that the electrical properties of a neuron are determined by the variety of its ion channels. To simulate the neural network, the project uses the Blue Gene supercomputer, which makes it possible to calculate the distribution of electrical activity inside a neocortical column in real time. If the project succeeds, a fundamentally new class of artificial cognitive systems will be born, a new paradigm of computational architecture with numerous practical applications in all areas of human activity [5,6]. However, modeling processes that involve the adaptability and plasticity of hundreds of millions of neurons will require fundamentally new supercomputers with petabytes of data, a globally addressable memory and a massively multithreaded architecture. It is necessary to support this revolutionary direction in the development of supercomputer technologies.

Izhikevich’s Computer Model of the Brain

One of the most striking projects in large-scale modeling of the brain was carried out by Eugene Izhikevich and Gerald Edelman. They built a computer model of the mammalian thalamocortical system based on data on the human brain. As noted earlier, modeling objects as complex as the brain requires high-performance computer systems. This project used a Beowulf computational cluster (a group of computers united by high-speed communication channels that appears to the user as a single hardware resource), consisting of commodity hardware running an operating system distributed with its source code. A feature of such a cluster is scalability, that is, the possibility of adding system nodes in order to increase performance. Nodes in a cluster can be any commercially available stand-alone computers. The Beowulf cluster consisted of 60 nodes, each with a 3 GHz processor and 1.5 GB of RAM. The computer model was written in the C programming language using MPI, which makes it possible to distribute the data being processed among compute nodes [7,8]. The highly detailed model that required this computational power simulates the work of a million spiking neurons, calibrated to reproduce the behavior of known types of neurons observed in vitro in the rat brain. The positions of the model cells were set from experimental data on the coordinates of human brain cells. The model used 22 types of neural cells, obtained by changing the parameters of Izhikevich’s model.
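How a change of parameters yields different cell types can be illustrated with a minimal sketch. It assumes the widely cited two-variable "simple model" form of Izhikevich's neuron, with parameters a, b, c, d and commonly quoted textbook values for regular-spiking and fast-spiking cells. The large-scale model discussed here may use a different parameterization, so the function name, the parameter table and the input current below are illustrative assumptions only.

```python
def simulate_izhikevich(a, b, c, d, current, t_max_ms=1000.0, dt=0.5):
    """Forward-Euler integration of the two-variable simple model.

    Returns the list of spike times in milliseconds.
    Assumed form (not taken from the reviewed paper):
        v' = 0.04 v^2 + 5 v + 140 - u + I
        u' = a (b v - u)
        if v >= 30 mV: v <- c, u <- u + d
    """
    v = c          # membrane potential (mV), started at the reset value
    u = b * v      # recovery variable, started on its nullcline
    spike_times = []
    for step in range(int(t_max_ms / dt)):
        v += dt * (0.04 * v * v + 5.0 * v + 140.0 - u + current)
        u += dt * a * (b * v - u)
        if v >= 30.0:                 # spike cutoff reached
            spike_times.append(step * dt)
            v = c                     # reset the membrane potential
            u += d                    # spike-triggered adaptation
    return spike_times


# Hypothetical parameter table: only (a, b, c, d) differ between cell types.
PARAMS = {
    "regular_spiking": (0.02, 0.2, -65.0, 8.0),
    "fast_spiking": (0.1, 0.2, -65.0, 2.0),
}

if __name__ == "__main__":
    for name, (a, b, c, d) in PARAMS.items():
        spikes = simulate_izhikevich(a, b, c, d, current=10.0)
        print(name, "spikes in 1 s:", len(spikes))
```

The same update loop serves every cell type. Only the four parameters change, which is what makes this kind of model inexpensive enough to run for very large populations.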

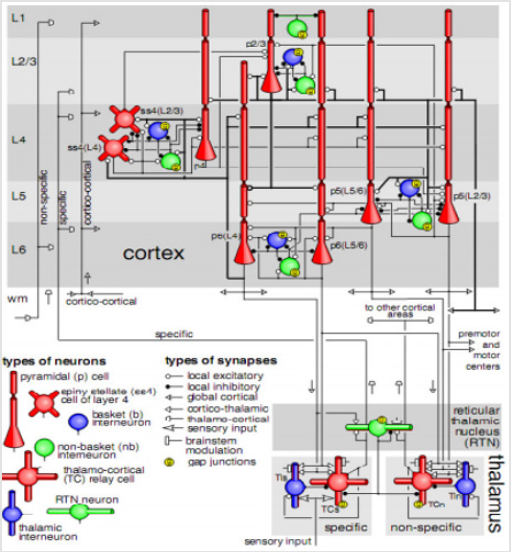

Depending on morphology and target layer, the model neurons comprised 8 types of excitatory neurons [p2/3, ss4 (L4), ss4 (L2/3), p4, p5 (L2/3), p5 (L5/6), p6 (L4), p6 (L5/6)] and 9 types of inhibitory neurons [nb1, nb2/3, b2/3, nb4, b4, nb5, b5, nb6, b6]. The model contains almost half a billion synapses with the corresponding receptors, short-term synaptic plasticity, and long-term STDP plasticity. For reasons of computational performance, the density of model neurons and synapses per square millimeter of surface was reduced, so model neurons had fewer synapses and less detailed dendritic trees than real neurons. Initializing the model took about 10 minutes, and computing each second of model time took about 1 minute (a submillisecond time step was used). The model reproduces macroscopic regimes characteristic of normal brain activity, which arise spontaneously from interactions between anatomical and dynamic processes. It exhibits spontaneous activity, sensitivity to changes in individual neurons, the emergence of waves and rhythms, and functional connectivity on various scales. Figure 1, taken from [9], shows a simplified diagram of the microcircuit structure of the laminar cortex (top) and thalamic nuclei (bottom). The scheme is a six-layer structure and contains thalamic nuclei; it is based on in vitro studies of neurons of the cat visual cortex. To obtain a complete picture, it is necessary to solve data-intensive tasks (so-called DIS tasks) on computers with a new electronic base and a non-von-Neumann architecture. Classical superclusters are not suitable for such breakthrough directions in modern science. Information processing in pulsed neuromorphic networks has potential advantages over formal (stationary) neural networks: the ability to use local rules for training the weights of connections between neighboring neurons, more flexible adaptation to time-varying input data, and lower power consumption.

Figure 1: A simplified diagram of the microcircuit structure of the laminar cortex (top) and thalamic nuclei (bottom). The scheme is a six-layer structure and contains thalamic nuclei. The diagram is based on in vitro studies of neurons of the cat visual cortex.

Conclusion

The brain is an extremely complex object consisting of a large number of different types of cells, including the main signaling cells - neurons (cells that generate and transmit electrical pulses and can form networks through contacts called synapses), glial cells that regulate metabolism, blood vessel cells, etc. Modeling such systems, which are complex in their internal connections and large in the number of elements, is extremely difficult on modern personal computers because of the high computational cost of the resulting models. Supercomputer technologies remove this obstacle and allow a greater variety of modeling methods to be used. One such method is the modeling of multidimensional systems (large-scale modeling). Large-scale modeling is an area of supercomputer modeling concerned with developing global computer models of multidimensional systems and conducting numerical experiments with them. Such models integrate macro and micro models that simulate the interconnected functioning of multi-level systems. This direction arose relatively recently, owing to significant progress in the technology of manufacturing microcircuits and in parallel computing, and to the increased computing power of supercomputer systems, made accessible by the advent of specialized software. Large-scale modeling is based on the principle of hierarchical reduction, which assumes that any complex system consists of hierarchically subordinate subsystems (levels of organization). A system at a high level of organization consists of systems of a lower level, and the totality of lower-level systems forms a system of a higher level. Applying this principle to modeling in neuroscience makes it possible to represent the brain as several interacting, independently described subsystems. The hierarchy of the model makes it possible to reach the level of detail required by the research by increasing or decreasing the number of levels of organization considered. 
However, as the number of levels of organization grows, so does the number of parameters describing the system, which greatly complicates the task of creating a realistic model that reproduces the phenomena observed in a laboratory experiment. An increase in the number of model parameters increases the amount of input data required to determine them, and these data are difficult to measure and often insufficiently accurate. For this reason, abstraction is used when creating models - an approach that makes it possible to discard parameters that are unimportant for the task the model was developed to solve.

Thus, the task of abstraction is to preserve only what is important for building and analyzing models at different levels of organization, without losing ease of manipulation. The modeling method considered here provides researchers with a set of neural network simulators that can greatly simplify research in neuroscience. In addition, pulsed neural networks process information in a way similar to biological nervous systems (neurons transmit information using short pulses - spikes) and are therefore also interesting from a fundamental point of view. Hardware implementations of pulsed neuromorphic networks based on a non-von-Neumann architecture are of interest because of their potentially higher data processing speed compared with traditional implementations. In one such implementation, each neuron integrates its input pulses, weighted by the synapses over which they arrived, and generates an output pulse if the resulting value exceeds a certain threshold. This pulse is applied both to the inputs of other neurons and back to the input synapses, which makes it possible to adjust their weights, for example, according to Hebb’s rules or their variants - STDP rules (spike-timing-dependent plasticity, that is, plasticity that depends on spike timing). Such plasticity needs to be realized in organic memristors, for example those based on polyaniline, which are promising as synaptic connections in hardware implementations of neuromorphic networks.
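The integrate, threshold and feedback scheme described above can be sketched in a few lines. The sketch is a software illustration only and is not a model of any memristive device. The leaky integration, the exponential pair-based STDP window, and all names and constants (`run_neuron`, `stdp_delta`, `a_plus`, `a_minus`, `tau`, `leak`) are assumptions chosen for illustration.

```python
import math


def stdp_delta(dt, a_plus=0.01, a_minus=0.012, tau=20.0):
    """Pair-based STDP window; dt = t_post - t_pre in time steps.

    Pre before post (dt > 0) potentiates, post before pre (dt < 0)
    depresses, and simultaneous spikes (dt == 0) leave the weight as is.
    """
    if dt > 0:
        return a_plus * math.exp(-dt / tau)
    if dt < 0:
        return -a_minus * math.exp(dt / tau)
    return 0.0


def run_neuron(input_spikes, weights, threshold=1.0, leak=0.9):
    """Leaky integrate-and-fire neuron with STDP feedback to its synapses.

    input_spikes: one list of 0/1 values (one per synapse) per time step.
    Returns (output spike steps, final weights).
    """
    weights = list(weights)
    last_pre = [None] * len(weights)   # last input spike time per synapse
    last_post = None                   # last output spike time
    potential = 0.0
    out = []
    for t, spikes in enumerate(input_spikes):
        potential *= leak              # passive decay between steps
        for i, s in enumerate(spikes):
            if s:
                last_pre[i] = t
                if last_post is not None:
                    # input arrives after an output spike: depression
                    w = weights[i] + stdp_delta(last_post - t)
                    weights[i] = min(max(w, 0.0), 1.0)
                potential += weights[i]  # weighted integration of the pulse
        if potential > threshold:
            out.append(t)
            potential = 0.0
            last_post = t
            # the output pulse is fed back to the input synapses
            for i, t_pre in enumerate(last_pre):
                if t_pre is not None:
                    w = weights[i] + stdp_delta(t - t_pre)
                    weights[i] = min(max(w, 0.0), 1.0)
    return out, weights


if __name__ == "__main__":
    spikes, w = run_neuron([[1, 0], [0, 1], [1, 0]], [0.6, 0.6])
    print("output spikes at steps:", spikes)
    print("weights after learning:", w)
```

The weight update is local: each synapse needs only its own last input time and the time of the neuron's output pulse, which is the property that makes such rules attractive for hardware synapses.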

References

- Hammarlund P, Ekeberg O (1998) Large neural network simulations on multiple hardware platforms. Journal of Computational Neuroscience 5(4): 443-459.

- Shahaf G, Eytan D, Gal A, Kermany E, Lyakhov V, et al. (2008) Order-based representation in random networks of cortical neurons. PLoS Computational Biology 4(11): e1000228.

- Bakkum DJ, Ben-Ary G, Gamblen P, DeMarse TB, Potter SM, et al. (2004) Removing some “A” from AI: Embodied Cultured Networks. Microscope, pp. 130-145.

- Abeles M (1991) Corticonics: Neural Circuits of the Cerebral Cortex. Cambridge University Press, p. 296.

- Edelman GM (1993) Neural Darwinism: selection and reentrant signaling in higher brain function. Neuron 10(2): 115-125.

- Diesmann M, Gewaltig MO, Aertsen A (1999) Stable propagation of synchronous spiking in cortical neural networks. Nature 402(6761): 529-533.

- Nádasdy Z, Hirase H, Czurkó A, Csicsvari J, Buzsáki G, et al. (1999) Replay and time compression of recurring spike sequences in the hippocampus. The Journal of Neuroscience 19(21): 9497-9507.

- Ikegaya Y, et al. (2004) Synfire Chains and Cortical Songs: Temporal Modules of Cortical Activity. Science 304(5670): 559-564.

- Izhikevich EM, Edelman GM (2008) Large-scale model of mammalian thalamocortical systems. Proceedings of the National Academy of Sciences of the United States of America 105(9): 3593-3598.

Mini Review
